Understanding network and remote servers: IP/URL, ssh, scp, wget, curl

In computing, a network is a group of computers and other devices (routers, gateways, modems, etc.) that are connected so they can exchange data. The computers are either desktop PCs, which is the type you're using, or server computers. The internet in its entirety is an example of an enormous network of global proportions. As a short introduction, watch this short and simple video explaining the internet: What's the internet.

Computers and other devices on the internet have addresses called IP addresses with the syntax x.x.x.x, where each x is a number between 0 and 255. There are two types of IP addresses: private and public. They are told apart by the number ranges they use. Private IP addresses are 'private' in the sense that they are only valid inside your local network and cannot be reached directly from the internet. You can check your computer's IP addresses by typing,

Prompt$ ifconfig

which will display information on the IP addresses currently in use by your computer. For Windows users, your IP address should be under 'wifi0' or 'eth0', which mean that you're connected via WiFi or a LAN cable respectively. For Mac users, it should be one of the IP addresses next to inet. The entry 'lo: inet 127.0.0.1' is your machine's loopback address and is not your private IP address. For more information on finding your IP address on a Mac, you can follow this link: Finding your IP address on Mac OS.

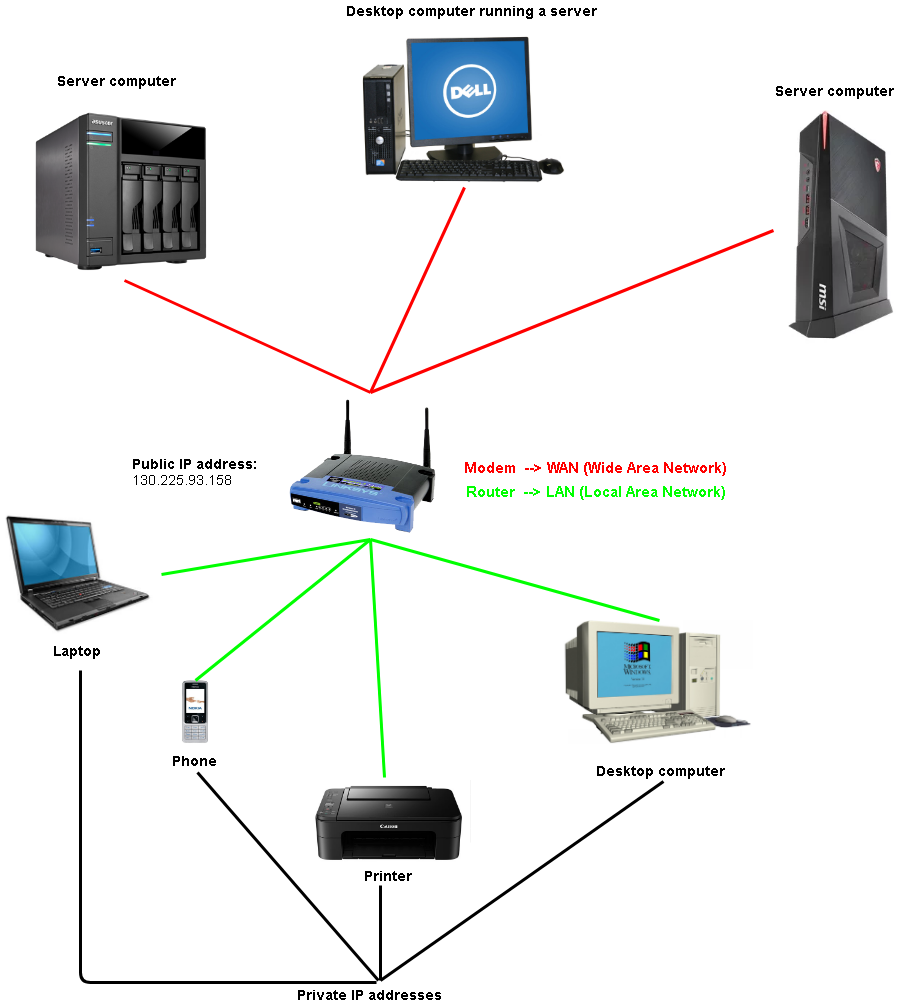
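The text above says that private and public addresses differ in the numbers used, without listing the ranges. As a minimal sketch, assuming the commonly reserved private ranges (10.x.x.x, 172.16.x.x to 172.31.x.x and 192.168.x.x) and the loopback range 127.x.x.x, the check can be written in plain shell. The function names `is_ipv4` and `classify_ipv4` are made up for this illustration, and anything outside those ranges is simply labelled "public", which ignores a few other special-purpose ranges.

```sh
# Succeeds if $1 has the form x.x.x.x with each x between 0 and 255.
is_ipv4() {
    case "$1" in
        *[!0-9.]* | .* | *. | *..*) return 1 ;;   # stray characters or empty fields
    esac
    # Split the address on dots into the positional parameters.
    set -- $(IFS=.; printf '%s\n' $1)
    [ "$#" -eq 4 ] || return 1
    for octet in "$@"; do
        [ "${#octet}" -le 3 ] && [ "$octet" -le 255 ] || return 1
    done
}

# Prints loopback, private, public or invalid for the address in $1.
classify_ipv4() {
    if ! is_ipv4 "$1"; then
        echo invalid
        return 0
    fi
    case "$1" in
        127.*)                                      echo loopback ;;
        10.* | 192.168.*)                           echo private ;;
        172.1[6-9].* | 172.2[0-9].* | 172.3[01].*)  echo private ;;
        *)                                          echo public ;;   # simplification, see above
    esac
}

for ip in 127.0.0.1 192.168.1.10 172.20.5.1 91.198.174.192 300.1.1.1; do
    printf '%-16s %s\n' "$ip" "$(classify_ipv4 "$ip")"
done
```

You could run `classify_ipv4` on the addresses that ifconfig reports to see which of them are private.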

Public IP addresses connect you with the rest of the internet. Typically, a public IP address belongs to a modem/router, which you can think of as a frontline device that connects all of your private devices to the rest of the internet. Devices connected to the same modem all share the same public IP address, and because public IP addresses are 'public', they must be unique across the internet. This is not the case for private IP addresses: since they aren't directly reachable from the internet, the same private address can be reused in many different local networks. A network that spans public IP addresses is called a 'Wide Area Network' (WAN), which is represented with a red line in figure 7.1. Note that figure 7.1 has been simplified so that the routers for the server computers aren't included. You can look up your public IP address by following this link: Public IP address. You can also find your public IP address by using wget,

Prompt$ wget -qO- ifconfig.me

The option -q is short for quiet mode, and -O- ensures that the output is written to stdout. macOS is not equipped with wget by default, but you can instead use the command curl,

Prompt$ curl ifconfig.me

You shouldn't get too attached to your IP addresses, as they can easily change. Your public IP address is assigned by your internet service provider (ISP), so it will differ depending on the WiFi network you're connected to, and it may change when you unplug and replug your router or whenever your ISP decides to change it.

We briefly mentioned using a LAN cable, but we haven't defined what LAN is. LAN is short for 'Local Area Network', and you can think of it as the network connecting your private devices. In figure 7.1, this is depicted as the devices connected by the green line: printer, phone and computer. The device that allocates private IP addresses to your private devices, while also establishing connectivity between them, is your router. Often the modem and router are combined into one device, which is how it's illustrated in figure 7.1. For more information on the difference between a router and a modem, follow this link: Modem vs router.

The IP addresses we've been discussing so far are IPv4 addresses. There are approximately 4.3 billion possible IPv4 addresses, which was sufficient for some time. However, it became clear that this would not be enough as the internet grew. The next version of IP addresses, IPv6, therefore has about 7.9x10^28 times as many addresses as IPv4.
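The numbers above can be checked with a little arithmetic. An IPv4 address has four fields with 256 possible values each, so there are 256^4 = 2^32 addresses. Assuming the standard IPv6 address length of 128 bits (not stated in the text above), the sketch below lets awk do the calculation; the function name `address_counts` is made up for this illustration.

```sh
# Print the number of IPv4 and IPv6 addresses and the ratio between them.
address_counts() {
    awk 'BEGIN {
        ipv4 = 2^32     # four fields with 256 values each: 256^4 = 2^32
        ipv6 = 2^128    # assumption: IPv6 addresses are 128 bits long
        printf "IPv4 addresses: %.0f\n", ipv4
        printf "IPv6 addresses: %.1e\n", ipv6
        printf "IPv6 / IPv4:    %.1e\n", ipv6 / ipv4
    }'
}

address_counts
```

The first line shows the roughly 4.3 billion IPv4 addresses, and the last line shows the factor of about 7.9x10^28.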

Servers and URLs

A 'server' is simply a computer connected to a network that provides 'services' to other computers. The computers that the server provides services for are called clients. This kind of relationship between servers and clients is what's defined as the 'client-server model'.

A simple example of a server that you're likely familiar with is a print server. Print servers provide the service of printing for computers connected to the same network. In the client-server model, the connected computers are the clients and the print server is the server. A print server would typically be what's called a 'local server'. Servers can be divided into local and remote servers, the only difference being that local servers are set up within a LAN (so within the green lines in figure 7.1) and remote servers are set up on another 'remote computer' (the servers connected by the red line in figure 7.1). Remote servers are found everywhere on the internet, because that's largely what the internet is made of. There are different types of servers, but you're probably most familiar with web servers (Wikipedia, Google, YouTube, Facebook, etc.). Web servers are available to clients through a web browser (Google Chrome, Internet Explorer, etc.) and a URL, which is likely the only way you've been accessing them up till now. You can, however, also interact with them in a more 'old school' way through the command line interface. For example, in the section 'Standard streams and working with files', we used wget to retrieve files from URLs.

URL is short for 'Uniform Resource Locator', and URLs are used to locate websites and the pages and files on them. A URL consists of several parts: 'protocol', 'subdomain', 'second level domain', 'top level domain', 'directory/folder', 'filename/webpage', and 'file extension'. Let's go through what each part represents by using a Wikipedia URL as an example.

https://en.wikipedia.org/wiki/Elon_Musk

- https --> protocol.

- en --> subdomain.

- wikipedia --> second level domain.

- org --> top level domain.

- wiki --> folder/directory.

- Elon_Musk --> webpage.

The protocol used is https, short for 'Hypertext Transfer Protocol Secure'. This is the protocol that your browser (Google Chrome, Internet Explorer, etc.) uses to securely retrieve the data corresponding to the URL from the web server. Protocols are a different topic, and we'll talk more about them later. Before 'https', the protocol used was 'http', but because 'http' sends data unencrypted, more and more websites are switching to 'https'. In short, 'https' ensures that the data exchanged between your browser and the web server is encrypted, and you can read more about this by following this link. Not all websites use it, however; for instance, this very website is using the 'http' protocol for interaction with browsers.

Following 'https', there can be a subdomain, and in this case it's en. The subdomain can be called just about anything, but the most commonly used is 'www'. You don't have to include a subdomain, but it's important that one of the two URLs, the one with a subdomain and the one without, redirects to the other in order to avoid duplicate versions of the URL. For example, if you write 'https://wikipedia.org/wiki/Elon_Musk' in your browser, you will be redirected to 'https://en.wikipedia.org/wiki/Elon_Musk'. Next, wikipedia is the second level domain. Together with the top level domain, the second level domain makes up the domain name of the URL, which is what makes it unique. The top level domain in this URL is org, which is short for organization. A more commonly used top level domain that you're familiar with is 'com', short for commercial. The folder/directory is wiki, and it is followed by the webpage Elon_Musk. In this case, there's no file extension, but in previous sections we've been using URLs such as https://teaching.healthtech.dtu.dk/material/unix/ex1.acc, where the filename is 'ex1' and the file extension is 'acc'.
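The breakdown of the Wikipedia URL can also be done in the shell itself. The following is a minimal sketch using parameter expansion; the function name `split_url` is made up for this illustration, and it assumes a simple URL with a one-part top level domain (it would not handle endings like 'co.uk' or URLs that contain a port number).

```sh
# Split a URL of the form protocol://[subdomain.]name.tld/path into its parts.
split_url() {
    url=$1
    protocol=${url%%://*}         # everything before "://"
    rest=${url#*://}              # everything after "://"
    host=${rest%%/*}              # up to the first "/"
    case "$rest" in
        */*) path=${rest#*/} ;;   # after the first "/"
        *)   path= ;;             # no folder or webpage given
    esac
    top_level=${host##*.}         # after the last dot
    name=${host%.*}               # host without the top level domain
    second_level=${name##*.}
    case "$name" in
        *.*) subdomain=${name%.*} ;;
        *)   subdomain= ;;        # no subdomain in this URL
    esac
    printf 'protocol=%s\nsubdomain=%s\nsecond_level=%s\ntop_level=%s\npath=%s\n' \
        "$protocol" "$subdomain" "$second_level" "$top_level" "$path"
}

split_url "https://en.wikipedia.org/wiki/Elon_Musk"
# protocol=https
# subdomain=en
# second_level=wikipedia
# top_level=org
# path=wiki/Elon_Musk
```

Calling it on 'https://wikipedia.org/wiki/Elon_Musk' gives an empty subdomain, matching the version of the URL without 'en'.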

You can find the IP address of a domain by using the command nslookup. The command

Prompt$ nslookup <Domain_name>

outputs the IP address to your terminal along with some additional information. For example, if you typed

Prompt$ nslookup wikipedia.org

you would get an IP address such as 91.198.174.192 (the exact address can differ over time and between locations). The command nslookup receives this information from what are called DNS (Domain Name System) servers. DNS servers are systems that store domain names together with their corresponding IP addresses, ensuring that you're brought to the right IP address when you use a URL.
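To make the idea of a name-to-address table concrete, here is a toy sketch of a lookup. It is not how a real DNS server works internally; it only mimics the mapping. The table holds the wikipedia.org address mentioned above plus one made-up entry ('my-server.example' with an address from a range reserved for documentation), and the function names `dns_table` and `lookup` are hypothetical.

```sh
# A toy table with one "<IP address> <domain name>" pair per line.
# The second entry is made up for this illustration.
dns_table() {
    cat <<'EOF'
91.198.174.192 wikipedia.org
192.0.2.10 my-server.example
EOF
}

# Print the IP address stored for the domain name in $1.
# The exit status is non-zero if the name is not in the table.
lookup() {
    dns_table | awk -v name="$1" '
        $2 == name { print $1; found = 1 }
        END        { exit !found }'
}

lookup wikipedia.org
lookup unknown.example || echo "no record found"
```

A real lookup with nslookup asks a DNS server over the network instead of reading a local table.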

Another useful command is ping, which is used to check network connectivity between your computer (the host) and another host or server. In simple terms, it sends a small data packet (an 'echo request') to the specified IP address or hostname and waits for a response. The time it takes for the response to come back is called latency. High latency is what causes 'lagging' in online computer games, and it can make websites feel slow. Conversely, low latency makes for enjoyable gaming and web browsing. You can check the latency to a server by typing,

Prompt$ ping <IP/HOSTNAME>

in your terminal.

We've already used wget in an earlier section to download data files, but here we'll go into more detail with some of its options. Essentially, wget allows you to download files from servers without being logged into that server. macOS does not come with wget installed, but you can use curl instead.

Prompt$ wget <URL>

will download files from the server specified by the URL, as long as it doesn't require any sort of login. You can try this out yourself for any URL, but the content of the files you download might seem a little strange if all you're downloading is a webpage. Webpages are written in HTML, a markup language you might not be familiar with.

If you need to download a big file, you can run the download as a background process.

Prompt$ wget -b <URL>

will download the URL as a background process, allowing you to do other work within the shell as you wait.

If your download was interrupted for some reason, you can resume the download of the partially downloaded file using the -c option.

Prompt$ wget -c <URL>

will resume the download of the file from the URL.

The command curl is quite similar to wget, although there are some differences. The differences are summed up nicely in this link: Curl vs wget.

Similar to wget you can download a file from a URL,

Prompt$ curl <URL>

will fetch the content at the URL and print it to stdout. To save it to a file named after the remote file instead, add the option -O.

Multiple files can be downloaded with the syntax,

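Prompt$ curl "http://website.{URL_1,URL_2,URL_3}.com"

will download from the three URLs formed by inserting URL_1, URL_2 and URL_3 in turn. Note that there are no spaces inside the braces, and that the quotes make sure curl receives the braces unchanged.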

If you need to download a series of files,

Prompt$ curl -O "ftp://ftp.something.com/file[1-20].jpeg"

will download file1.jpeg through file20.jpeg and save each under its own name. Here we're using the ftp protocol.

You can save the content of the URL to a specific file on your computer,

Prompt$ curl -o <FILE> <URL>

will download the content from the URL and save it as <FILE>.
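Since wget is not available on every system, a script that downloads files can check which tool is installed before using it. The following is a minimal sketch of that idea; the function names `pick_downloader` and `download` are made up for this illustration, and the curl options used (-f to fail on server errors, -s for silent mode, -S to still show errors, -L to follow redirects) are one reasonable choice rather than the only one.

```sh
# Print the name of the first available download tool: wget, curl or none.
pick_downloader() {
    if command -v wget >/dev/null 2>&1; then
        echo wget
    elif command -v curl >/dev/null 2>&1; then
        echo curl
    else
        echo none
    fi
}

# Download the URL in $1 and save it as the file named in $2.
download() {
    case "$(pick_downloader)" in
        wget) wget -q -O "$2" "$1" ;;
        curl) curl -fsSL -o "$2" "$1" ;;
        *)    echo "neither wget nor curl is installed" >&2
              return 1 ;;
    esac
}

echo "Downloads will use: $(pick_downloader)"
# Example call (needs network access), using a file from an earlier section:
# download https://teaching.healthtech.dtu.dk/material/unix/ex1.acc ex1.acc
```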

In networks, we distinguish between webpages and websites. A website is a collection of webpages that all live under the same domain. Examples of a website and a webpage could be https://wikipedia.org and https://wikipedia.org/wiki/Elon_Musk respectively. Websites like Wikipedia, Netflix, Google, etc. all have server computers connected to the internet that provide data to clients. There are many other types of servers as well: mail servers, data servers, FTP servers, proxy servers, chat servers, etc. If you're interested in these other types of servers, you can read more about them by following this link: Different server types.

We defined a 'server' as a computer connected to a network that provides 'services' to other computers, which essentially means that any computer could be made into a server. That being said, there are computers designed especially to be servers. These types of computers are called 'server computers'. They have different specs than normal computers and are designed to serve many clients simultaneously. They also typically have a lot of hardware redundancy, such as 'RAID disk systems', 'ECC memory' and 'dual power supplies', which ensures that if one part of the server breaks, the server can continue working without crashing. However, a server doesn't have to be run on a server computer, and you can just as well run a server on a desktop computer. It really depends on the scale at which the server is going to be used.

Finally, most servers do not use a GUI (Graphical User Interface) and can only be operated through a CLI (Command Line Interface), which is one of the main reasons why you've been learning to become efficient with CLIs. You might be wondering why servers don't use graphical user interfaces, as they can't be that hard to implement, and then you wouldn't have to take this course. There's actually a very good reason for this. Every additional application, including a graphical user interface, makes a server more susceptible to security breaches, which could allow uninvited guests (hackers) inside the server. Hence, servers are normally designed to be as simple as possible, while still being useful to the intended user.

Protocols and ports

IP addresses play a central role in connecting your computer to the internet: they ensure that your requests go to the right place and that the information is returned correctly. To make sure that computers can communicate efficiently, something called protocols is used. You don't need a deep understanding of protocols unless you're planning to become a web developer. Simply put, a protocol is a standard set of rules that dictates how computers communicate across a network. A protocol that you're likely familiar with is 'http', short for 'Hypertext Transfer Protocol', which is the protocol that your browser uses for retrieving data from a website.

Protocols use something called ports, which you can think of as doors through which data can go in and out. Port numbers range from 0 to 65535, and port numbers 0-1023 are considered system ports, which are the ones that the most common protocols use. The port numbers typically used for HTTP, SMTP (Simple Mail Transfer Protocol) and FTP (File Transfer Protocol) are 80, 25 and 21 respectively. Keep in mind, however, that there are alternative ports for most protocols, and you can in fact use any port number as long as it isn't already in use by another service. Port numbers 1024-49151 are called registered ports, which applications can reserve for their own use, and port numbers 49152-65535 are called dynamic ports, which are assigned as needed. Exactly what 'assigned as needed' means can be illustrated with an example. Imagine you connect to a web server on port 80 using the http protocol. The web server listens on port 80, but your own computer also needs a port for its end of the connection, so your operating system temporarily assigns it a free port, typically from the dynamic range. The server sends the requested data back to that port, and when the connection is closed the port is released again. This is how your computer keeps several simultaneous connections apart, even when they all go to port 80 on the same server. This kind of temporary assignment is the most common use of dynamic ports, but applications that you install can also be set up to use a port in the upper ranges.

Let's get into how we can use commands like telnet and ssh to connect with servers. Till now, you've likely only been connecting with remote servers through browser applications like Google Chrome, Mozilla Firefox, Safari, etc. The command

Prompt$ telnet <URL> <PORT>

will connect you to the specified <URL> using the specified <PORT>. For example, you can connect to Gmail's mail server, which uses the 'smtp' protocol, on port 465.

Prompt$ telnet smtp.gmail.com 465

Writing mails with telnet is technically possible but difficult to do and we won't be bothering with trying. Practically, telnet is mostly used to troubleshoot whether the connection of your computer to a server is working properly.
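The port ranges discussed earlier in this section can be turned into a small lookup. The sketch below classifies a port number using the IANA division into system ports (0-1023), registered ports (1024-49151) and dynamic ports (49152-65535); the function name `classify_port` is made up for this illustration.

```sh
# Print system, registered, dynamic or invalid for the port number in $1.
classify_port() {
    case "$1" in
        '' | *[!0-9]* | ??????*)    # empty, not a number, or more than 5 digits
            echo invalid
            return 0 ;;
    esac
    if   [ "$1" -le 1023 ];  then echo system
    elif [ "$1" -le 49151 ]; then echo registered
    elif [ "$1" -le 65535 ]; then echo dynamic
    else echo invalid
    fi
}

# The ports mentioned in this section, plus two from the upper ranges.
for port in 21 25 80 443 465 8080 50000; do
    printf '%5s  %s\n' "$port" "$(classify_port "$port")"
done
```

All the well-known protocol ports from this section (21, 25, 80, 443 and 465) fall in the system range.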

The commands ssh and scp, short for secure shell and secure copy, establish secure connections to remote servers. The command ssh sets you up with a shell environment on the remote server, allowing you to do work there. The command scp allows you to copy files to and from a remote server. As a quick introduction, this tutorial tells you almost all you need to know about ssh and scp: Tutorial video on using ssh and scp.

To establish a connection to a remote server in a secure shell environment with port 443,

Prompt$ ssh -p443 username@x.x.x.x

where 'username' is the username you're using on the remote server with the IP address 'x.x.x.x'. Port 443 is the port normally used for secure web browser communication (https). There's no specific reason why we're using it here; it's just to show that you can specify which port you would like to access the remote server through. The ssh server must of course be listening on the port you specify.

To copy a file from a remote server to your device with port 443,

Prompt$ scp -P 443 username@x.x.x.x:Directory/to/the/file/file.txt /mnt/c/Users/Username/Desktop/My_working_directory

where 'Directory/to/the/file/file.txt' is the path to the file on the remote server and /mnt/c/Users/Username/Desktop/My_working_directory is the path to where the file is copied. Note that scp takes the port with a capital -P, since the lowercase -p has a different meaning for scp (it preserves file timestamps and permissions).

Conversely, you can copy a file from your device to the remote server with port 443,

Prompt$ scp -P 443 /mnt/c/Users/Username/Desktop/My_working_directory/file.txt username@x.x.x.x:Directory/to/the/file

From web server to your computer display (optional)

Here we give an explanation of exactly how information is transferred from a web server and displayed on your computer. Understanding this in detail is optional, and it's really just placed here for your curiosity.

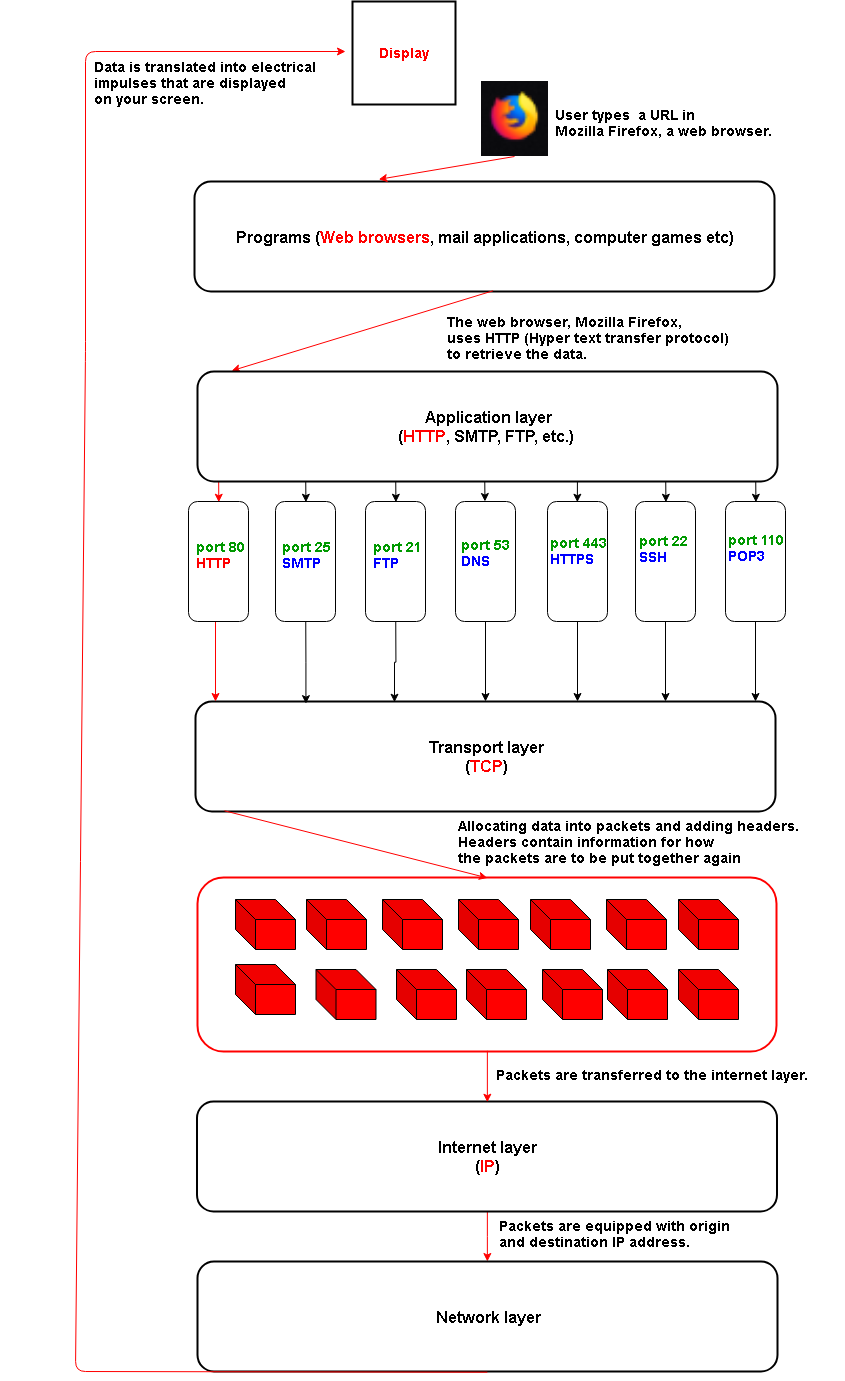

This link, Introduction to protocols, will guide you to an introductory video on protocols. In the video, protocols are explained in layers, so to be consistent we'll do the same. There's also a small mistake in the video: 'SMTP' (Simple Mail Transfer Protocol) is not used for checking mail but only for sending mail. For checking mail, other protocols like POP (Post Office Protocol) and IMAP (Internet Message Access Protocol) are used.

- Application layer (HTTP, SMTP, FTP) and ports

In the application layer, protocols like HTTP (Hypertext Transfer Protocol) receive data from the program that you're using. In the case of HTTP, the data would originate from your web browser, but in the case of SMTP the data would originate from a mail application. After having received the data from the program you're running, the application layer sends the data through a port to the TCP (Transmission Control Protocol). Port numbers range from 0 to 65535, and port numbers 0-1023 are considered system ports, which are the ones that the most common protocols use. The port numbers typically used for HTTP, SMTP and FTP are 80, 25 and 21 respectively. Keep in mind, however, that there are alternative ports for almost every protocol, and you can in fact use any port number as long as it isn't already in use by another service.

- Transport layer (TCP)

In the Transmission Control Protocol (TCP), the data received from the application layer is split into what are called 'packets', which you can think of as small bundles of data. Splitting the data into packets allows it to be transported as efficiently as possible to where it ultimately needs to go. For the data to be put back together properly after arriving at its destination, TCP equips each packet with a header containing the information needed to reassemble the packets in the right order, such as a sequence number. First, however, these packets go through the Internet Protocol (IP).

- Internet layer (IP)

The Internet Protocol (this is what the 'IP' in 'IP address' is short for) takes care of addressing, routing and delivering your requests correctly. The packets that it receives from the transport layer are equipped with both the origin and the destination IP address. This ensures that the packets know where they need to go, and that the receiving device knows where the packets came from. Next, the packets go through the network layer.

- Network layer

Among other things, the network layer handles 'MAC addressing', which ensures that the packets are delivered to the right device on the local network, and it converts the data from the packets into electrical impulses for transmission.
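The idea behind the transport layer, splitting data into numbered packets that can be put back together in the right order, can be imitated with ordinary text tools. This is only a toy sketch and not how TCP is actually implemented; the function names `packetize` and `reassemble` are made up, and the "header" here is nothing more than a sequence number in front of each piece of text.

```sh
# Split the message in $1 into packets of at most $2 characters.
# Output: one packet per line, as "<sequence number><TAB><payload>".
packetize() {
    printf '%s\n' "$1" | fold -w "$2" | awk '{ printf "%d\t%s\n", NR, $0 }'
}

# Read packets from stdin in any order, sort them by sequence number
# and join the payloads back into the original message.
reassemble() {
    sort -n | cut -f 2- | tr -d '\n'
    echo
}

message="hello from the transport layer"

# Show the packets, then let them "arrive" in reverse order and reassemble them.
packetize "$message" 8
packetize "$message" 8 | sort -r | reassemble
```

Even though the packets arrive in the wrong order, the sequence numbers are enough to restore the original message.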

Command list

Here we present all the commands used in this section.

| Unix Command | Acronym translation | Description |
|---|---|---|
| wget [OPTION] <URL> | Web get | A non-interactive network downloader used to download files located at the URL. |
| curl [OPTION] <URL> | Client URL | Similarly to wget, it is used to download files at the specified URL. This is an alternative for macOS users, where wget is not installed by default. |
| ssh [-p PORT] <user@IP/Domain_name> | Secure shell | Used to establish a secure connection to a remote server/system. It's also known as the secure shell protocol. |
| scp [-P PORT] <SOURCE> <DESTINATION> | Secure copy protocol | Copies files securely from a remote server to your computer or from your computer to a remote server. A remote source or destination is written as user@IP/Domain_name:path. |
| telnet <URL> <PORT> | Teletype network | Establishes a connection with the specified URL and port. |
| ifconfig [OPTION] | Interface configuration | Displays the currently active network interfaces; when used with -a, it displays the status of all interfaces. |
| nslookup [OPTION] <Domain_name> | Name server lookup | Used to obtain information about a server through a DNS (Domain Name System) server. |
| ping [OPTION] <IP/HOSTNAME> | Packet Internet Groper | Checks network connectivity between your computer (the host) and another host/server. |

Exercises 1: Using ssh and scp

In order to use the commands ssh and scp, you need to have a remote server you can try them on. You can set up an SSH server on your own computer, and although this is hardly a remote server, it will allow you to try the commands ssh and scp. For Windows users, this requires a couple more steps.

- For windows users

First make sure Ubuntu is updated,

Prompt$ sudo apt-get update

Prompt$ sudo apt-get upgrade

Then install the ssh client and server,

Prompt$ sudo apt-get install openssh-client

Prompt$ sudo apt-get install openssh-server

You should now be able to start a ssh server,

Prompt$ sudo service ssh start

Prompt$ ps -A

You should be able to see the daemon process, 'sshd', up and running. You can stop it again by typing,

Prompt$ sudo service ssh stop

- For Mac users

Prompt$ sudo systemsetup -setremotelogin on

This turns on Remote Login, which is the ssh server built into macOS. macOS does not have the service command, so there is nothing more to start. You can check that the ssh server is enabled with,

Prompt$ sudo systemsetup -getremotelogin

which should report that Remote Login is on. You can turn it off again by typing,

Prompt$ sudo systemsetup -setremotelogin off

By default, the port number that your ssh server uses is port 22. You can, however, change this by editing the Port line in the server configuration file sshd_config and then restarting the ssh server,

Prompt$ sudo vim /etc/ssh/sshd_config

- Now that you're set up with an ssh server, start it and connect with ssh (whenever you want to exit the remote server, simply type exit in the command prompt).

- Copy any file from your computer to somewhere on the server.

- Copy any file from the remote server to your home directory.